The framing of a peer review task can significantly affect the reviews it produces. In this three-part study, subtle feature changes in rubrics, task structure, and artifact representation produced reviews that differed significantly in both the quality and the focus of reviewer feedback.

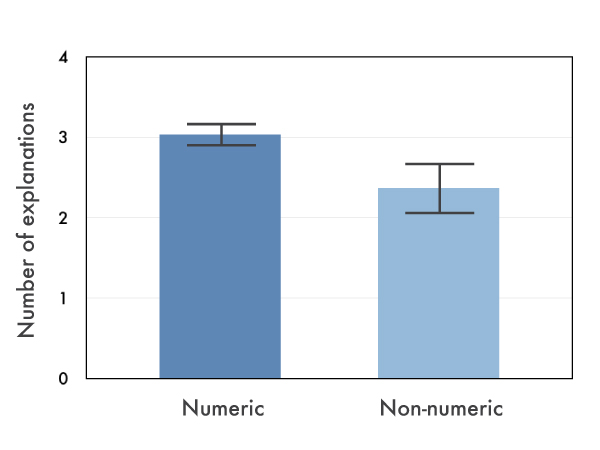

Numeric ratings prompt more explanation

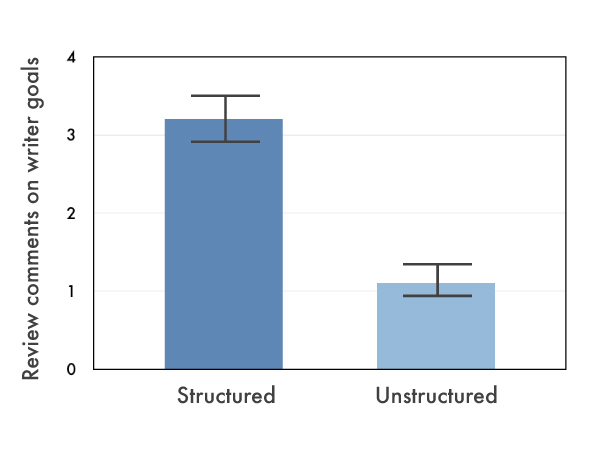

Shorter tasks prompt goal-oriented feedback

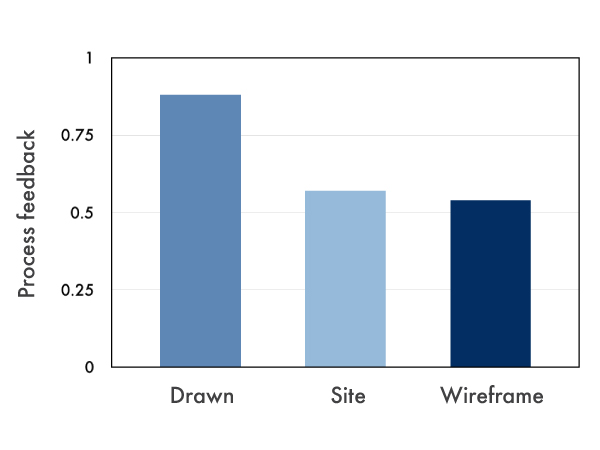

Drafts encourage reviewers to focus on process